Machine learning demands precise programming to achieve optimal results, as accuracy in code ensures better model performance, minimizes errors, and enhances predictive accuracy.

To harness its full potential, precise programming is essential. Machine learning algorithms learn from data and make decisions. But they need correct instructions to function well. Precise programming ensures the algorithms produce accurate results. Without it, the system may fail to understand the data.

This can lead to wrong conclusions and poor performance. Programmers must carefully code and test their algorithms. They must also consider various scenarios and edge cases. Accurate coding and thorough testing help build reliable machine-learning models. This blog will explore the importance of precision in machine learning programming. We will discuss how to achieve desired outcomes through meticulous coding practices.

Importance Of Precision

Machine learning is a fascinating field that has transformed many industries. But achieving the desired results requires precise programming. Precision is not just a technical requirement; it is the backbone of effective machine learning.

Role In Machine Learning

Precision in programming plays a crucial role in machine learning. A small error in the code can lead to incorrect predictions. This is especially true in complex models where data accuracy is vital. For example, consider a model predicting medical conditions. Even minor inaccuracies can lead to wrong diagnoses. Therefore, programmers must ensure every line of code is correct.

Impact On Results

The impact of precision on results is significant. Accurate programming ensures models perform as expected. Here are a few points highlighting this impact:

- Improved Accuracy: Precise programming improves the model’s accuracy.

- Reliable Predictions: It ensures the predictions are reliable.

- Reduced Errors: It minimizes errors in the final output.

Let’s look at a table that shows the correlation between precision and outcomes:

| Precision Level | Model Accuracy | Error Rate |

|---|---|---|

| High | 95% | 5% |

| Medium | 80% | 20% |

| Low | 60% | 40% |

As shown, higher precision leads to better model accuracy. This, in turn, reduces the error rate. Precision is not just about accuracy; it is about trust. Users trust models that consistently produce reliable results.

In summary, precision is essential in machine learning. It affects both the role and impact of the models. Ensuring precise programming is the key to successful machine learning applications.

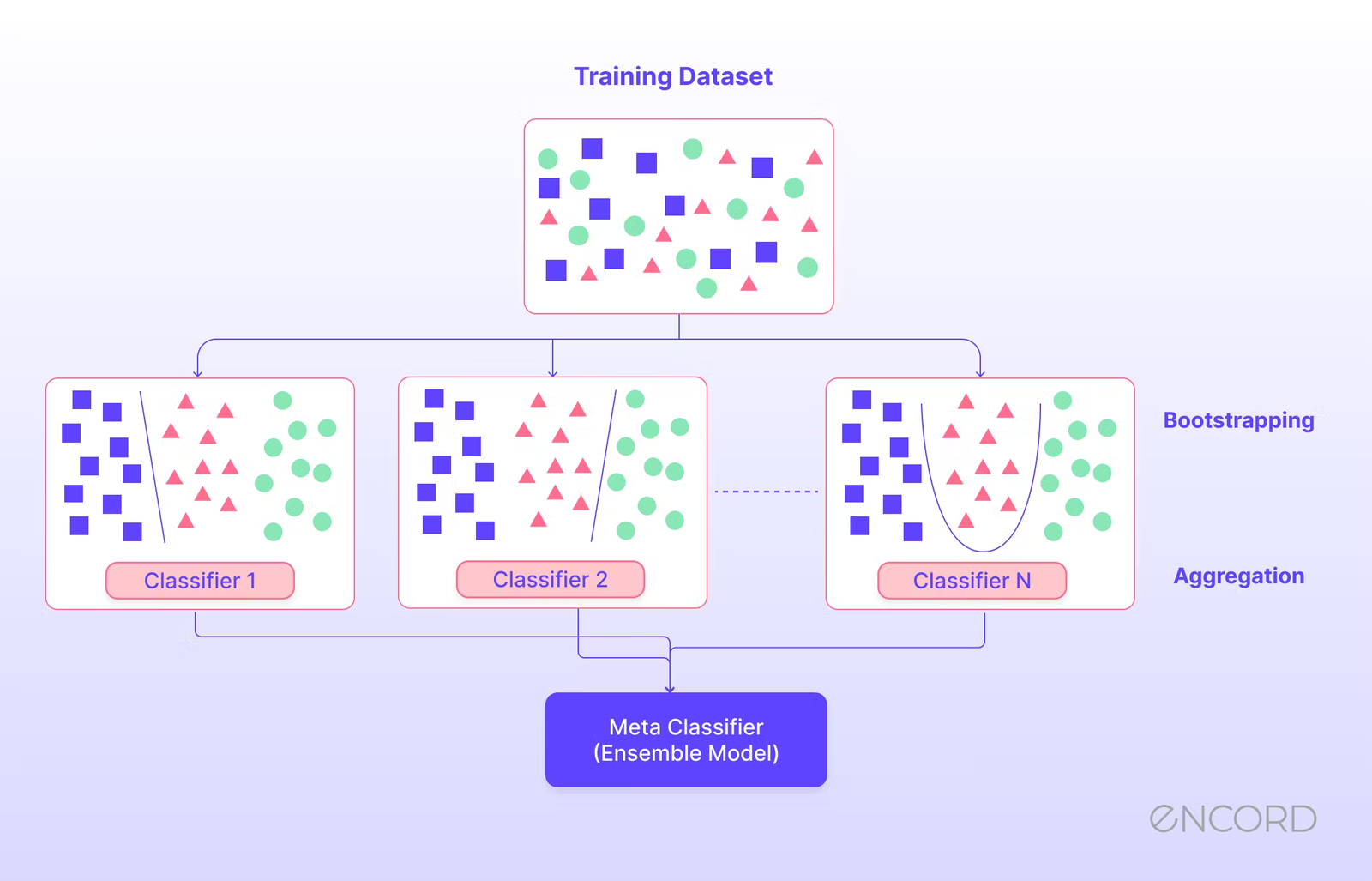

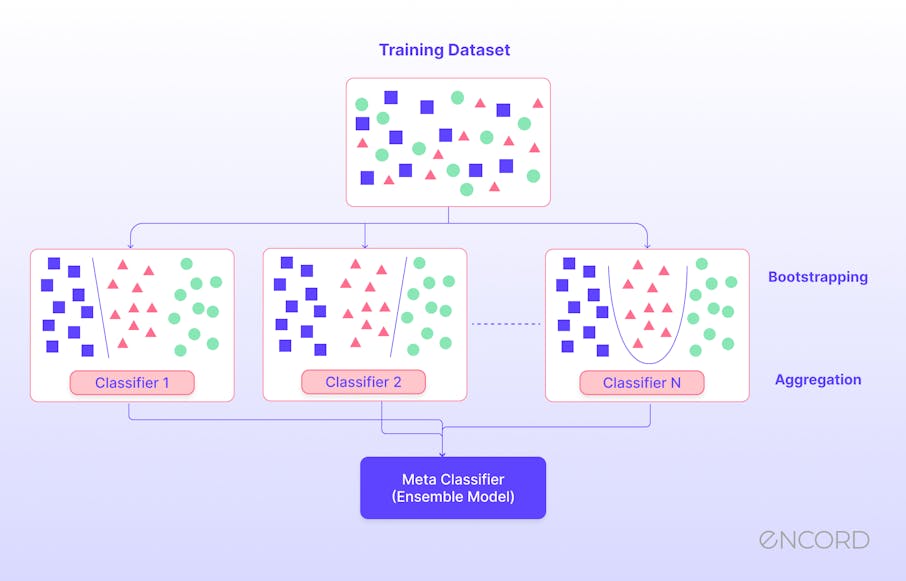

Credit: encord.com

Key Programming Concepts

Machine learning needs precise programming to get good results. Some key concepts are crucial. These include algorithms and data structures. Understanding these concepts can help you build better models.

Algorithms

Algorithms are the heart of machine learning. They are the step-by-step rules for solving problems. Common algorithms include:

- Linear Regression – predicts a value based on past data.

- Decision Trees – makes decisions by breaking down data into smaller parts.

- K-Nearest Neighbors (KNN) – classifies data based on the closest data points.

Each algorithm has its own strengths and weaknesses. Choosing the right one is key to success.

Data Structures

Data structures store and organize data efficiently. They impact the performance of machine learning models. Common data structures are:

- Arrays – store data in a list format.

- Linked Lists – store data with nodes connected by pointers.

- Hash Tables – store data with key-value pairs for fast retrieval.

Good data structures can speed up your algorithms. They help manage large datasets effectively.

| Algorithm | Use Case |

|---|---|

| Linear Regression | Predicting house prices |

| Decision Trees | Classifying emails as spam or not |

| KNN | Recommending products |

Data Preprocessing

Data preprocessing is a crucial step in machine learning. It involves preparing raw data for analysis. This step ensures the data is clean and suitable for the model. Proper preprocessing can improve the accuracy of the model significantly.

Data Cleaning

Data cleaning is the first step in preprocessing. It involves removing or correcting inaccurate records. This step ensures the data quality is high. Clean data leads to better model performance. Common tasks in data cleaning include:

- Removing duplicates

- Handling missing values

- Correcting errors

Handling missing values is critical. You can either remove them or fill them with a default value. Removing duplicates prevents redundant data from skewing results. Correcting errors involves fixing typos or incorrect entries.

Feature Engineering

Feature engineering is the next step. It involves creating new features from existing data. These new features can help the model learn better. Good features can significantly improve model performance. Key activities in feature engineering include:

- Scaling and normalization

- Creating interaction features

- Encoding categorical variables

Scaling and normalization adjust the range of data. This helps the model to learn more effectively. Creating interaction features involves combining existing features. This can uncover hidden patterns in the data. Encoding categorical variables converts text data into numerical data. This is essential for most machine learning models.

Model Selection

Choosing the right model is crucial in machine learning. It determines the accuracy and effectiveness of your solution. A well-chosen model can make the difference between success and failure. This process involves careful consideration of various factors.

Choosing Algorithms

Selecting the proper algorithm is essential. Each algorithm has strengths and weaknesses. Some work well with small datasets. Others perform better with large, complex data. Here are some common algorithms:

- Linear Regression: Suitable for predicting numeric values.

- Decision Trees: Good for classification tasks.

- Support Vector Machines: Effective for high-dimensional spaces.

- Neural Networks: Ideal for deep learning tasks.

Consider the nature of your data. Understand the problem you are solving. Match the algorithm to your specific needs.

Evaluation Metrics

Once you select an algorithm, evaluate its performance. Use metrics to measure effectiveness. Common metrics include:

- Accuracy: The ratio of correct predictions to total predictions.

- Precision: The ratio of true positive results to all positive results.

- Recall: The ratio of true positive results to all actual positives.

- F1 Score: The harmonic mean of precision and recall.

Choose metrics that align with your goals. For example, if false positives are costly, prioritize precision. If capturing all positive cases is crucial, focus on recall.

Evaluate your model using these metrics. Adjust as needed to achieve the best results.

Training Models

Training models is a core aspect of machine learning. It involves feeding data into algorithms to teach them how to make decisions or predictions. This process is crucial for achieving accurate results. Proper training ensures the model learns the right patterns from the data. Let’s dive into two essential parts of training models: Optimization Techniques and Hyperparameter Tuning.

Optimization Techniques

Optimization techniques are methods used to adjust the model’s parameters. These techniques help in minimizing errors and improving performance. Common optimization techniques include:

- Gradient Descent: Adjusts parameters to reduce the error rate.

- Stochastic Gradient Descent (SGD): Uses random samples to update parameters.

- Mini-batch Gradient Descent: Combines the benefits of both gradient descent and SGD.

- Adam Optimizer: Combines the advantages of other optimization methods.

Choosing the right optimization technique is vital. It affects the speed and accuracy of the model training process. Each method has its strengths and weaknesses. Selecting the appropriate one depends on the specific problem and dataset.

Hyperparameter Tuning

Hyperparameters are settings that control the learning process. They are not learned from the data. Instead, they must be set before training begins. Examples of hyperparameters include:

- Learning Rate: Determines how quickly the model adapts to new data.

- Batch Size: Number of data samples processed before updating the model.

- Number of Epochs: Times the entire dataset is used to train the model.

- Regularization Rate: Prevents overfitting by adding penalties to the model.

Finding the right hyperparameters is a challenging task. It often involves trial and error. Some common techniques for hyperparameter tuning are:

- Grid Search: Tries all possible combinations of hyperparameters.

- Random Search: Samples random combinations of hyperparameters.

- Bayesian Optimization: Uses probability to find the best hyperparameters.

Effective hyperparameter tuning can significantly improve model performance. It can be the difference between a good and an excellent model.

Avoiding Overfitting

Machine learning models need precise programming to deliver accurate results. One of the biggest challenges is avoiding overfitting. Overfitting occurs when a model learns the training data too well, including noise and outliers. This leads to poor performance on new, unseen data. To tackle overfitting, various techniques can be applied.

Regularization Methods

Regularization methods help prevent overfitting by adding a penalty for larger coefficients in the model. This keeps the model simpler and more generalizable.

- L1 Regularization (Lasso): Adds the absolute value of coefficients as a penalty term to the loss function.

- L2 Regularization (Ridge): Adds the squared value of coefficients as a penalty term to the loss function.

- Elastic Net: Combines L1 and L2 regularization methods for a balanced approach.

Using these methods, the model avoids fitting too closely to the training data, resulting in better performance on new data.

Cross-validation

Cross-validation is another effective technique to avoid overfitting. It involves splitting the dataset into multiple subsets and training the model on different combinations of these subsets.

A popular method is K-Fold Cross-Validation, where the data is divided into K subsets. The model is trained on K-1 subsets and tested on the remaining subset. This process is repeated K times, ensuring each subset is used as a test set once.

- Split the dataset into K subsets.

- Train the model on K-1 subsets.

- Test the model on the remaining subset.

- Repeat the process K times.

- Average the performance across all K trials.

This technique helps in getting a better estimate of the model’s performance on unseen data and reduces the risk of overfitting.

| Technique | Purpose |

|---|---|

| Regularization | Penalizes larger coefficients to keep the model simpler. |

| Cross-Validation | Provides a better estimate of model performance on unseen data. |

By using these techniques, you can ensure your machine learning model performs well on new data, achieving the desired results.

Deployment Challenges

Deploying machine learning models is not easy. It involves many challenges. These challenges affect the performance and accuracy of the models.

Scalability Issues

Scalability is a major concern in machine learning deployment. As data grows, models must handle larger datasets efficiently. Many models struggle with this. They slow down or produce errors.

To address scalability, use distributed computing. This approach divides tasks across multiple machines. Cloud services, like AWS and Azure, offer scalable solutions. They enable models to process vast amounts of data quickly.

| Scalability Solutions | Benefits |

|---|---|

| Distributed Computing | Handles large datasets effectively |

| Cloud Services | Provides on-demand resources |

| Load Balancing | Ensures even distribution of tasks |

Real-time Processing

Real-time processing is crucial for many applications. It requires models to deliver results instantly. Examples include fraud detection and recommendation systems.

Achieving real-time processing is difficult. It demands high computational power and efficient algorithms.

Use optimized algorithms and hardware accelerators, like GPUs, to achieve real-time processing.

- Optimized Algorithms: Improve processing speed

- GPUs: Boost computational power

Real-time processing also requires low-latency networks. This ensures quick data transfer between systems.

Future Of Precision In ML

The future of precision in machine learning (ML) is exciting and promising. As ML evolves, the need for precise programming becomes more crucial. This ensures that ML models deliver the desired results. Let’s explore some emerging trends and innovative tools shaping this future.

Emerging Trends

Emerging trends in ML focus on improving accuracy and precision. One key trend is AutoML. AutoML automates the selection of models and hyperparameters. This helps in creating more accurate models quickly. Another trend is Explainable AI. Explainable AI ensures that ML models are transparent. This builds trust and allows for better debugging. Federated Learning is also gaining traction. Federated Learning trains models across multiple devices without sharing data. This improves privacy and precision.

Innovative Tools

Several innovative tools are helping to achieve precision in ML. TensorFlow is a popular tool for building and training ML models. It offers flexibility and scalability. PyTorch is another powerful tool. PyTorch is known for its dynamic computation graph. This makes it easier to debug and optimize models. Keras provides a user-friendly interface for building neural networks. It simplifies the process of creating deep learning models. Scikit-learn is ideal for simple and efficient data analysis. It supports various ML algorithms and is easy to use.

| Tool | Key Feature |

|---|---|

| TensorFlow | Flexibility and scalability |

| PyTorch | Dynamic computation graph |

| Keras | User-friendly interface |

| Scikit-learn | Simple and efficient data analysis |

These tools and trends make it easier to achieve precision in ML. They help in building robust models that deliver accurate results. As we move forward, the focus on precision will continue to grow. This will lead to more reliable and trustworthy ML applications.

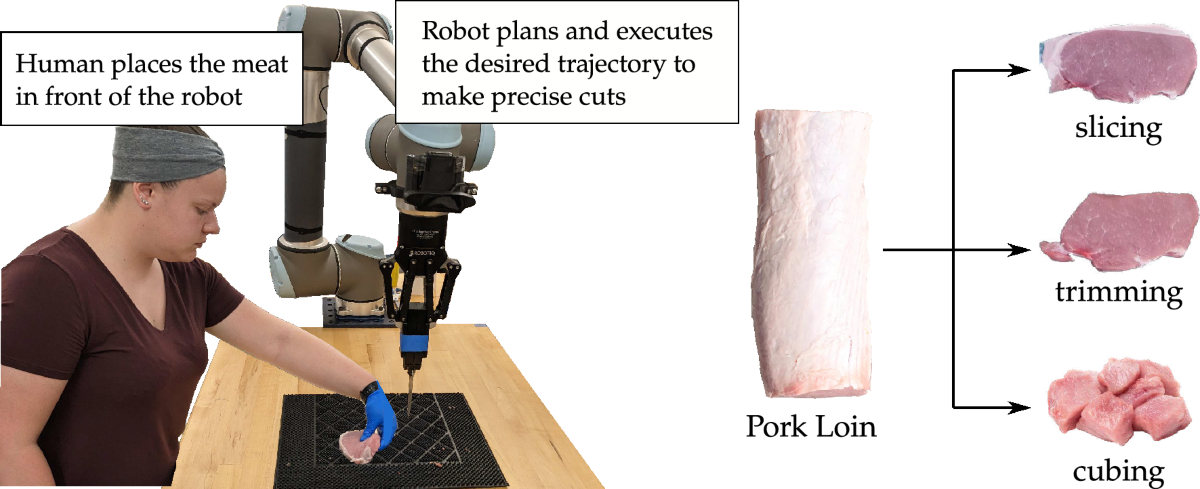

Credit: www.nature.com

Frequently Asked Questions

What Is Precise Programming In Machine Learning?

Precise programming in machine learning involves writing code with exact instructions. This ensures the model functions correctly. It minimizes errors and enhances performance.

Why Is Precision Important In Machine Learning?

Precision is crucial because machine learning models rely on accurate data processing. Errors can lead to incorrect results. Precision ensures reliability and efficiency.

How Does Programming Affect Machine Learning Results?

Programming directly impacts machine learning outcomes. Well-written code ensures accurate data handling. It optimizes the model’s performance and accuracy.

Can Machine Learning Succeed Without Precise Programming?

No, imprecise programming leads to errors. This affects the model’s accuracy and reliability. Precision is essential for successful machine learning.

Conclusion

Precise programming in machine learning is crucial for success. Clear code ensures accuracy. Consistent testing helps refine algorithms. Proper data handling reduces errors. Small mistakes can lead to big problems. Attention to detail is key. Learning and adapting are part of the process.

Machine learning thrives on precision. Achieve great results with careful programming. Keep improving and stay updated.